Accelerating deep learning with high energy efficiency: From microchip to physical systems

Authors: Huanhao Li, Zhipeng Yu, Qi Zhao, Tianting Zhong, Puxiang Lai*

Corresponding author: Puxiang Lai* (Department of Biomedical Engineering, Hong Kong Polytechnic University) puxiang.lai@polyu.edu.hk

DOI: 10.1016/j.xinn.2022.100252

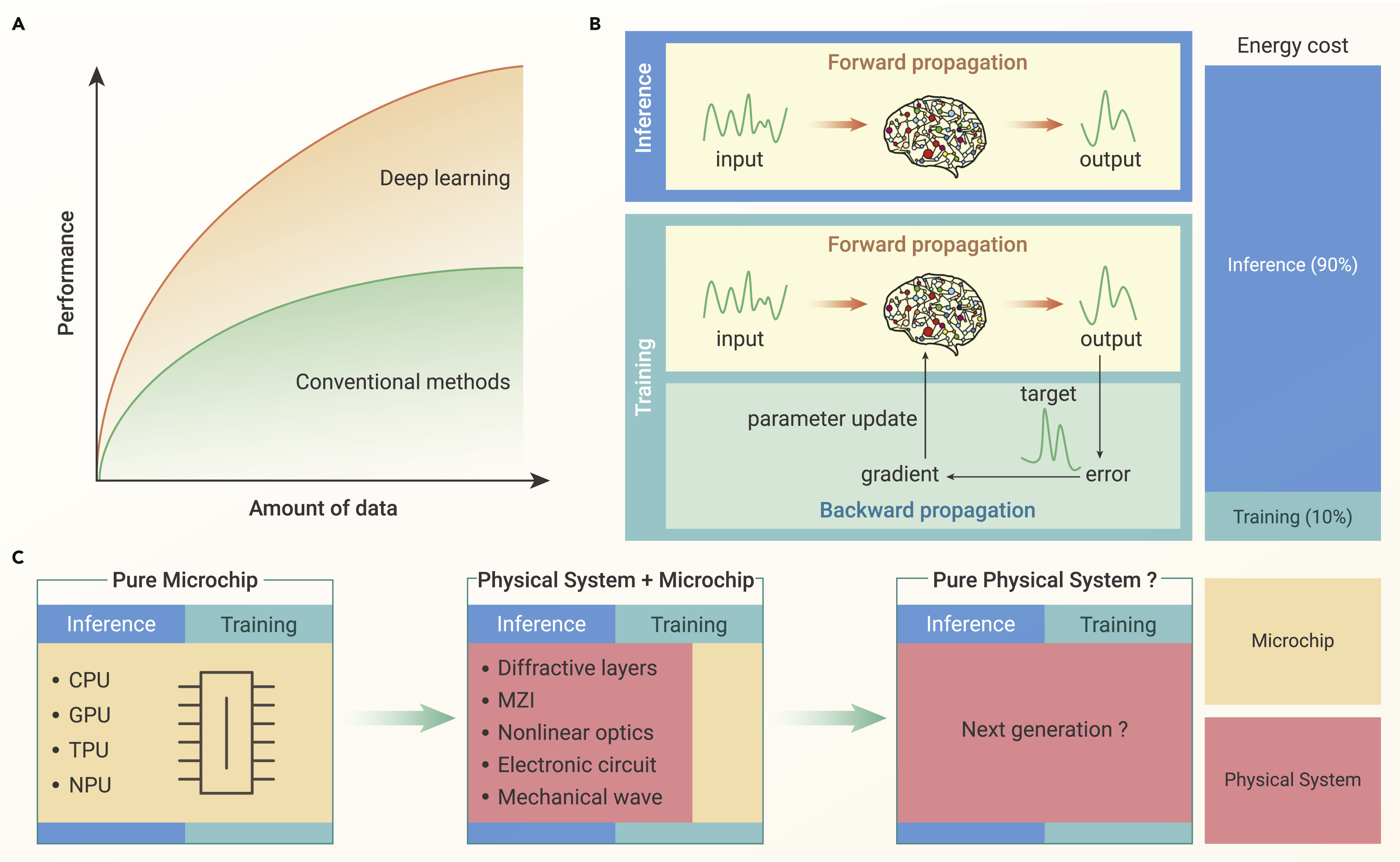

Abstract: In the era of digital technology and the internet, massive amounts of data are continuously generated from a variety of sources, including video, photos, audio, text, and the Internet of Things. Intuitively, feeding more data into an effective analysis should reveal more accurate patterns; despite data redundancy, a clearer picture can then be delineated for better decision-making. However, traditional methods, including conventional machine learning, do not benefit from the growing volume of data: their performance nearly saturates once the data collection is large enough (Figure 1A). This dilemma arises from their limited capability and, in the past, an insufficient supply of computing power.

Various accelerators have been developed to improve deep neural network (DNN) computation in terms of both processing speed and energy efficiency. Computing platforms show an overall trend of evolving from conventional electronics to physical systems, with progressive reductions in energy consumption. Although current realizations of physical systems still have limitations, vigorous further development is anticipated to keep improving energy efficiency while maintaining the desired performance.
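Much of the DNN workload that such accelerators target consists of multiply-accumulate (MAC) operations in matrix-vector products. The sketch below, assuming a plain fully connected network, counts MACs per inference and converts that count into energy given a per-MAC cost. The layer sizes, the platform names (`platform_A`, `platform_B`) and the picojoule figures are hypothetical placeholders, not measurements from this work.

```python
# Minimal sketch: estimate the multiply-accumulate (MAC) workload of a
# fully connected DNN and the energy one inference would take on a
# hypothetical accelerator. Layer sizes and energy-per-MAC values are
# illustrative placeholders (assumptions), not measured values.

def count_macs(layer_sizes):
    """Return the number of MACs for one forward pass (inference)
    through fully connected layers with the given widths."""
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def inference_energy_joules(macs, energy_per_mac_pj):
    """Energy for one forward pass, given a cost per MAC in picojoules."""
    return macs * energy_per_mac_pj * 1e-12


if __name__ == "__main__":
    layers = [784, 256, 128, 10]  # hypothetical small image-classification network
    macs = count_macs(layers)
    print(f"MACs per inference: {macs}")
    # Hypothetical per-MAC energy costs for two platforms (assumptions only).
    for platform, pj in {"platform_A": 1.0, "platform_B": 0.01}.items():
        energy = inference_energy_joules(macs, pj)
        print(f"{platform}: {energy:.3e} J per inference")
```

In this simple model, energy scales linearly with the per-MAC cost, so a platform with a lower cost per operation reduces energy per inference proportionally. Real accelerators also spend substantial energy on data movement, which this sketch ignores.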

Figure 1. Diagram of deep learning realizations. (A) Comparison between deep-learning and conventional methods on how the amount of data affects performance. (B) Procedures contained in deep learning that could be accelerated: inference and backward propagation (including error/gradient calculation and parameter update). The side bar denotes the energy cost of the inference (90%) and training (10%) phases. (C) Development trend of DNN accelerators from pure microchips to physical systems. MZI, Mach-Zehnder interferometer.
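Panel (B) separates a training iteration into inference (the forward pass), backward propagation (error and gradient calculation) and parameter update. As a minimal sketch, assuming a single linear neuron, a squared-error loss and plain gradient descent (all illustrative choices, not the models discussed in this work), the three procedures can be written as separate functions. The function names and the toy data are hypothetical.

```python
# Minimal sketch of the three procedures in panel (B) for a single linear
# neuron trained with a squared-error loss. Model, data and learning rate
# are hypothetical choices for illustration only.

def forward(w, b, x):
    """Inference: weighted sum of the inputs plus a bias."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def gradients(w, b, x, target):
    """Backward propagation: error and gradients of the loss
    0.5 * (y - target) ** 2 with respect to w and b."""
    y = forward(w, b, x)
    error = y - target                 # dL/dy
    grad_w = [error * xi for xi in x]  # dL/dw_i = error * x_i
    grad_b = error                     # dL/db = error
    return grad_w, grad_b, error


def update(w, b, grad_w, grad_b, lr):
    """Parameter update: one plain gradient-descent step."""
    new_w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
    new_b = b - lr * grad_b
    return new_w, new_b


def train(samples, n_inputs, lr=0.1, epochs=200):
    """Repeat forward pass, backward pass and update over the samples."""
    w, b = [0.0] * n_inputs, 0.0
    for _ in range(epochs):
        for x, target in samples:
            grad_w, grad_b, _ = gradients(w, b, x, target)
            w, b = update(w, b, grad_w, grad_b, lr)
    return w, b


if __name__ == "__main__":
    # Hypothetical data generated by target = 2*x0 - 1*x1 + 0.5
    data = [([0.0, 0.0], 0.5), ([1.0, 0.0], 2.5),
            ([0.0, 1.0], -0.5), ([1.0, 1.0], 1.5)]
    w, b = train(data, n_inputs=2)
    print("weights:", w, "bias:", b)
    print("inference on [2, 1]:", forward(w, b, [2.0, 1.0]))
```

Keeping the steps as separate functions mirrors the figure: an accelerator may speed up only the inference step, which is run far more often, or also the gradient and update steps needed for training.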
