Extended Learning Robustness for High-Fidelity Human Face Imaging from Spatiotemporally Decorrelated Speckles

Authors: Qi Zhao†, Huanhao Li†, Tianting Zhong†, Shengfu Cheng, Haofan Huang, Haoran Li, Jing Yao, Wenzhao Li, Chi Man Woo, Lei Gong, Yuanjin Zheng*, Zhipeng Yu*, Puxiang Lai*

Corresponding author: Yuanjin Zheng* (School of Electrical and Electronic Engineering, Nanyang Technological University) yjzheng@ntu.edu.sg

Corresponding author: Zhipeng Yu* (Department of Biomedical Engineering, Hong Kong Polytechnic University) yu.zh.yu@polyu.edu.hk

Corresponding author: Puxiang Lai* (Department of Biomedical Engineering, Hong Kong Polytechnic University) puxiang.lai@polyu.edu.hk

Abstract: Imaging within or through scattering media has long been a coveted yet challenging goal. Researchers have made significant progress in extracting target information from speckles, primarily by characterizing the transmission matrix of the scattering medium or by employing neural networks. However, the fidelity of the retrieved images is compromised when the state of the medium changes due to intrinsic motion or external perturbations. This variability decorrelates the training and testing data, which hinders the practical application of these frameworks. In this study, we propose a generative adversarial network (GAN)-based framework with extended robustness. It is designed to address the spatiotemporal instabilities of scattering media and the resulting decorrelation between training and testing data. Experiments demonstrate that our GAN can retrieve high-fidelity face images from speckles even when the scattering medium undergoes unknown changes after training. Notably, our GAN outperforms existing methods by retrieving images from unstable scattering media non-holographically and by effectively addressing speckle decorrelation, even after prolonged system inactivity (up to 37 h in our experiments, and potentially longer if needed). This resilience opens avenues for pre-trained networks to remain effective over time and can broaden the scope of learning-based methods in deep-tissue imaging and sensing under extreme environmental conditions.

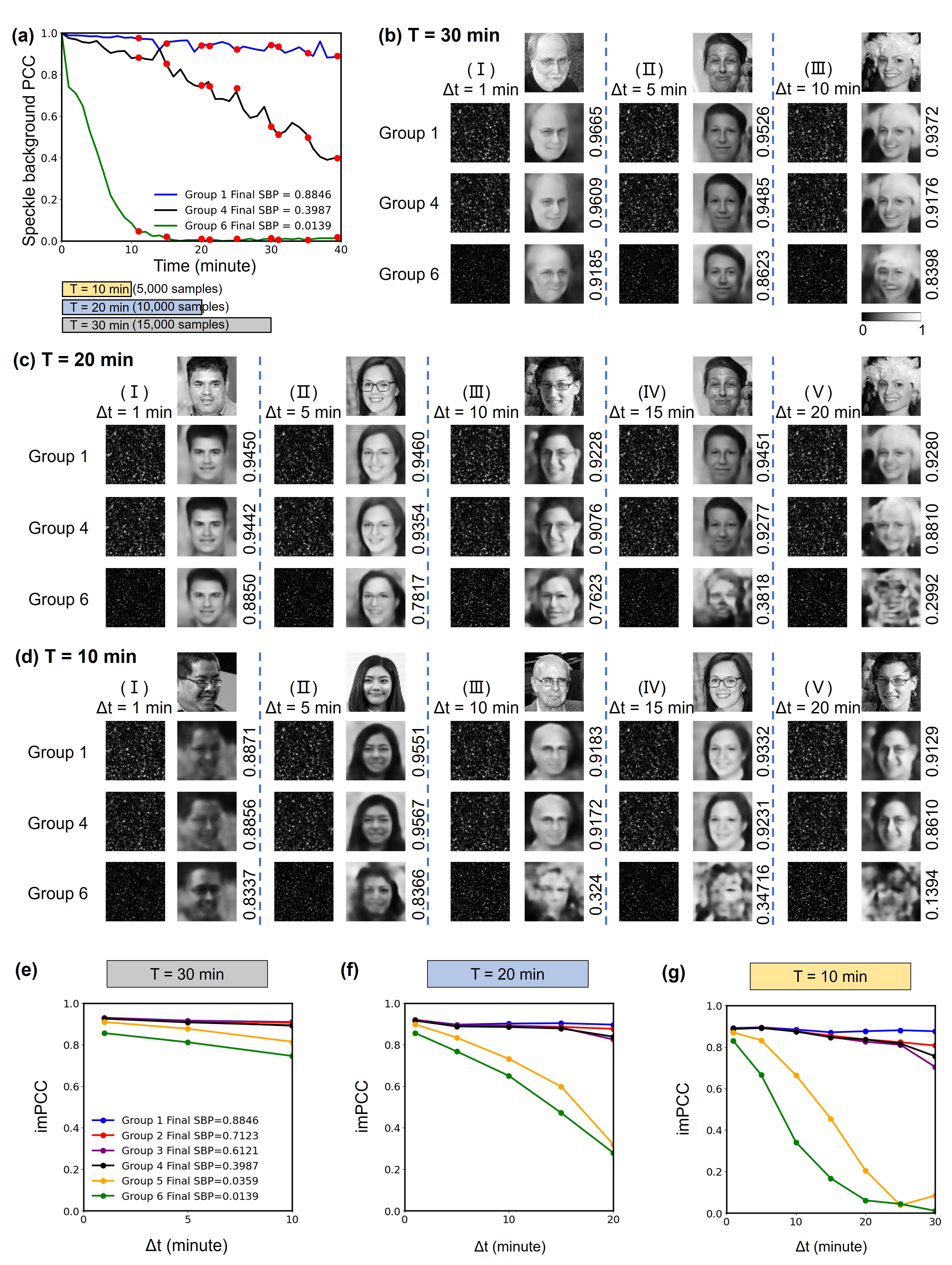

Retrieved images from speckles for different training durations T and different time intervals Δt between the training and testing datasets. a) Datasets: Groups 1, 4, and 6 are divided according to the training dataset duration T (10, 20, and 30 min). The speckle background Pearson correlation coefficients (PCCs) of the testing datasets are marked as red points. b–d) Images retrieved from speckles: the top row of each column shows the ground truth image; in the remaining rows, the right-hand columns show the images retrieved by feeding the speckles in the left-hand columns into the generator of the GAN; the numbers next to the retrieved images are the PCCs between the retrieved images and the corresponding ground truth images. The ground truth images are selected from the FFHQ dataset.[40] e–g) imPCC (average PCC between the retrieved images and the corresponding ground truths) versus time interval Δt for training dataset durations T = 30, 20, and 10 min, respectively.
Ground truth image (b-I): Copyright 2009, 100_1861, by Daniel Taylor, Flickr (https://www.flickr.com/photos/dtaylor404/3920892082/); the original image is cropped and converted to grayscale, under the terms of the CC-BY 2.0 license. Ground truth images (b-II) and (c-IV): Copyright 2010, Living Room Comedy 101 052810, by Alex Erde, Flickr (https://www.flickr.com/photos/alexerde/4682485372/); the original images are cropped and converted to grayscale, under the terms of the CC-BY 2.0 license. Ground truth images (b-III) and (c-V): Copyright 2015, Naughty Snowball IV-128, by PJ Rey, Flickr (https://www.flickr.com/photos/pjrey/8261366051/); the original images are cropped and converted to grayscale, under the terms of the CC-BY 2.0 license. Ground truth image (c-I): Copyright 2016, Fr15, by Európa Pont, Flickr (https://www.flickr.com/photos/europapont/26666567983/); the original image is cropped and converted to grayscale, under the terms of the CC-BY 2.0 license. Ground truth images (c-II) and (d-IV): Copyright 2017, Henriette Sørbøe Andreassen, by Senterpartiet (Sp), Flickr (https://www.flickr.com/photos/senterpartiet/32951636095/); the original images are cropped and converted to grayscale, under the terms of the CC-BY 2.0 license. Ground truth images (c-III) and (d-V): Copyright 2009, them again, by Josh, Flickr (https://www.flickr.com/photos/iheartphoto/204002570/); the original images are cropped and converted to grayscale, under the terms of the CC-BY 2.0 license. Ground truth image (d-I): Copyright 2014, Demand14 Speakers, by Cision Global, Flickr (https://www.flickr.com/photos/vocus/14439031135/); the original image is cropped and converted to grayscale, under the terms of the CC-BY 2.0 license. Ground truth image (d-II): Copyright 2018, by sirokame000, Flickr (https://www.flickr.com/photos/95949782@N03/27719339539/); the original image is cropped and converted to grayscale, under the terms of the CC-BY 2.0 license. Ground truth image (d-III): Copyright 2018, Mosman Faces launch, by Mosman Library, Flickr (https://www.flickr.com/photos/mosmanlibrary/5760074593/); the original image is cropped and converted to grayscale, under the terms of the CC-BY 2.0 license.
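The caption scores retrieval fidelity with the PCC between each retrieved image and its ground truth, and it defines imPCC as the average of those PCCs. Below is a minimal NumPy sketch of these two metrics. It assumes that images are equally shaped grayscale arrays and that the standard Pearson formula is applied over all pixels. The function names `pcc` and `im_pcc` are hypothetical and are not taken from the authors' `Main_complex_GAN.py`.

```python
import numpy as np


def pcc(a, b) -> float:
    """Pearson correlation coefficient between two equally shaped images.

    Assumption: the standard Pearson formula computed over all pixels.
    """
    a = np.asarray(a, dtype=np.float64).ravel()
    b = np.asarray(b, dtype=np.float64).ravel()
    if a.shape != b.shape:
        raise ValueError("images must have the same number of pixels")
    a_c = a - a.mean()  # subtract mean intensity of each image
    b_c = b - b.mean()
    denom = np.sqrt(np.sum(a_c ** 2) * np.sum(b_c ** 2))
    if denom == 0.0:
        raise ValueError("PCC is undefined for a constant image")
    return float(np.sum(a_c * b_c) / denom)


def im_pcc(retrieved, ground_truth) -> float:
    """imPCC: average PCC over paired retrieved / ground-truth images."""
    if len(retrieved) != len(ground_truth):
        raise ValueError("retrieved and ground-truth sets differ in size")
    if len(retrieved) == 0:
        raise ValueError("need at least one image pair")
    scores = [pcc(r, g) for r, g in zip(retrieved, ground_truth)]
    return float(np.mean(scores))


# Usage with synthetic data (not the paper's speckle data):
rng = np.random.default_rng(0)
gt = rng.random((4, 64, 64))                        # 4 stand-in "ground truths"
noisy = gt + 0.1 * rng.standard_normal(gt.shape)    # stand-in "retrievals"
print(im_pcc(noisy, gt))
```

The same `pcc` function could also be applied to pairs of speckle frames to track decorrelation over Δt. The caption does not specify how the speckle background is defined, however, so that use remains an assumption.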

Related papers

Decorrelation in Complex Wave Scattering

Qihang Zhang, Haoyu Yue, Ninghe Liu, Danlin Xu, Renjie Zhou, Liangcai Cao, George Barbastathis*

arXiv:2504.11330 (April 2025)

Code and data:

GAN code: https://github.com/863zq/863zq.github.io/blob/main/Code/Main_complex_GAN.py

Speckle data: https://doi.org/10.60933/PRDR/FY7EDJ